Turnitin-Proof AI Writing Software: How to Humanize AI Text & Pass Detection

Here's the truth nobody wants to admit: Turnitin's AI detection system is fundamentally flawed, and students worldwide are paying the price. The system designed to protect academic integrity is now destroying it by falsely accusing innocent students of cheating. If you've ever stared at a "suspicious AI content" flag on work you genuinely wrote yourself, you already understand the problem.

This is exactly why turnitin-proof AI writing software has become essential for students facing unreliable AI detection. The solution isn't to stop writing well. The solution is to learn how to humanize AI text and convert it into human writing that passes detection while preserving your original meaning. This guide gives you the exact tools and techniques to do it.

Why Turnitin-Proof AI Writing Software Matters for Students

An AI humanizer is a tool that transforms AI-generated text into content that reads and flows like genuine human writing. These tools analyze the linguistic patterns that trigger AI detectors and restructure sentences, vary vocabulary, and inject the natural irregularities that characterize authentic human communication.

But here's what most people don't realize: the same tools work equally well on text you wrote yourself that Turnitin incorrectly flags as AI-generated.

AI detectors like Turnitin's are commonly described as measuring something called "perplexity," which essentially tracks how predictable your word choices are. Low perplexity (predictable, common word sequences) gets flagged as potentially AI-generated. High perplexity (unusual word combinations and varied sentence structures) passes as human.

The problem with this approach is obvious to anyone who's thought about it for more than five seconds: skilled writers who communicate clearly and use standard academic vocabulary often produce "low perplexity" text. Non-native English speakers who've been taught proper English grammar produce "low perplexity" text. Students following assignment guidelines to write clearly and directly produce "low perplexity" text.
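To make the idea of perplexity concrete, here is a minimal sketch in Python. It scores text against an add-one-smoothed unigram model built from a tiny reference sample. This is a toy stand-in chosen for illustration: real detectors do not publish their models, and the function name `unigram_perplexity` and the sample strings are assumptions made here, not anything taken from Turnitin.

```python
import math
from collections import Counter


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    ref_tokens = reference.lower().split()
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")
    counts = Counter(ref_tokens)
    vocab = set(ref_tokens) | set(tokens)
    total = len(ref_tokens) + len(vocab)  # add-one smoothing denominator
    log_prob = sum(math.log((counts[t] + 1) / total) for t in tokens)
    return math.exp(-log_prob / len(tokens))


# Hypothetical sample data, for illustration only.
reference = "the cat sat on the mat and the dog sat on the rug"
common = "the cat sat on the rug"           # words the model has seen often
unusual = "a zebra pondered quantum mats"   # words the model has never seen

print(unigram_perplexity(common, reference))
print(unigram_perplexity(unusual, reference))
```

The familiar sentence scores lower than the unusual one, which is the pattern described above: plain, common wording looks "predictable" to a statistical model regardless of who wrote it.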

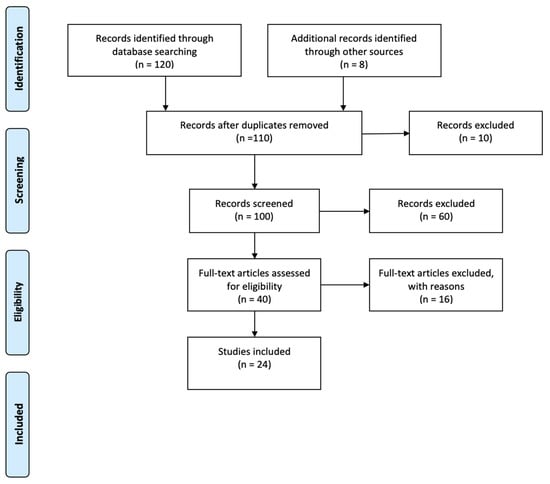

A study by Stanford University researchers found that seven widely used AI detectors misclassified, on average, over 61% of TOEFL essays written by non-native English speakers as AI-generated. About 97% of those essays, all written by real human test-takers, were flagged by at least one of the detectors. Meanwhile, the same tools achieved near-perfect accuracy with essays from native English-speaking eighth-graders.

This isn't a minor calibration issue. This is a fundamental design flaw that discriminates against non-native English speakers and punishes clear, direct writing.

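The National Centre for AI at JISC reported that even at a 1% false positive rate, which Turnitin claims, an institution with 20,000 students could generate approximately 4,800 false flags annually. That total counts submissions rather than students: 1% of 20,000 single submissions would be 200, so the figure assumes each student submits many pieces of work a year. It still means thousands of legitimately produced assignments potentially ending up in academic misconduct proceedings.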
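The arithmetic behind a figure like this can be sketched in a few lines of Python. The 24 submissions per student used below is an assumption made here because it is the value that reproduces 4,800; it is not a number taken from the JISC report, and the function name is hypothetical.

```python
def expected_false_flags(students: int,
                         submissions_per_student: int,
                         false_positive_rate: float) -> int:
    """Expected number of human-written submissions wrongly flagged per year."""
    return round(students * submissions_per_student * false_positive_rate)


# One submission per student at a 1% false positive rate.
print(expected_false_flags(20_000, 1, 0.01))   # 200

# Assumed 24 submissions per student per year (illustrative only).
print(expected_false_flags(20_000, 24, 0.01))  # 4800
```

The point of the sketch is that a small per-submission error rate scales with the volume of work checked, so the yearly total depends heavily on how many submissions each student makes.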

How Turnitin-Proof AI Writing Software Works to Avoid False AI Detection

Let's be specific about what the research actually shows, because the numbers are worse than most people realize.

According to research published in the International Journal for Educational Integrity, AI detection tools produce wildly inconsistent results. The same text run through different detectors returns completely different scores. A passage flagged as 90% AI-generated by one tool might register as 20% AI-generated by another.

The problem compounds when students attempt to legitimately edit AI-assisted drafts or use any form of writing assistance. Paraphrasing tools, grammar checkers, and even standard word processor suggestions can either trigger false positives or help actual AI text evade detection—depending on which detector you're using.

Research from UCLA's HumTech department found that while detectors identified pure ChatGPT text with 74% accuracy, that number plummeted to just 42% when students made minor modifications to the generated content. Simple prompt engineering—like asking ChatGPT to "write like a teenager"—reduced Turnitin's detection rate from 100% to 0% in testing by Times Higher Education.

This creates an absurd situation where:

- Students who write clearly get falsely flagged

- Students who deliberately obscure their AI use easily evade detection

- Non-native speakers face systematic discrimination

- The entire system rewards gaming the detector over genuine learning

The research is unambiguous: AI detection tools are neither accurate nor reliable enough to serve as the sole basis for academic integrity decisions. Yet institutions continue deploying them as if they were infallible.

How AI Humanizers Actually Work (The Technical Reality)

Understanding how AI humanizers function helps you use them more effectively and know why they're necessary in a broken system.

AI detection tools analyze text using several metrics:

- Perplexity: How predictable each word choice is based on the surrounding context. AI text tends toward "obvious" word choices, producing low perplexity. Human text typically shows more variation.

- Burstiness: The variation in sentence length and structure throughout a document. AI models tend to produce consistent sentence patterns. Human writers naturally vary between short, punchy sentences and longer, complex ones.

- Token probability: The likelihood of specific word sequences appearing together. AI generates text by selecting high-probability tokens, creating patterns detectors learn to recognize.

- Stylistic markers: Certain phrases, transitions, and structural patterns appear more frequently in AI-generated content than human writing.
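As a rough illustration of the burstiness metric in the list above, the following Python sketch measures how much sentence length varies within a passage. It uses the coefficient of variation (standard deviation divided by mean) of words per sentence. That formula is one common informal definition, assumed here for illustration; detectors do not publish their exact calculations, and the function names and sample passages are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # variation is undefined for a single sentence
    return statistics.stdev(lengths) / statistics.mean(lengths)


# Hypothetical sample passages, for illustration only.
uniform = ("The model writes a sentence. The model writes another one. "
           "The model writes one more.")
varied = ("It failed. After three weeks of rewriting the same introduction, "
          "I finally understood what my supervisor had meant. Why then?")

print(burstiness(uniform))  # 0.0, every sentence has five words
print(burstiness(varied))
```

The naive sentence splitter will miscount abbreviations such as "e.g." or "Dr.", so treat the numbers as a rough indicator rather than a precise measurement.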

Quality AI humanizers address all these factors simultaneously. They:

- Introduce natural sentence length variation

- Replace predictable word choices with synonym alternatives

- Add the small imperfections and stylistic quirks characteristic of human writing

- Restructure passages to break up mechanical patterns

- Maintain the original meaning while changing the surface-level expression

The best tools do this while preserving your intended meaning, academic tone, and citation integrity. Poor tools simply swap words with random synonyms, destroying readability and often changing meaning.

The Best AI Humanizer for Turnitin: Top Tools Ranked

After extensive testing across multiple detection platforms, here are the AI humanizer tools that actually deliver results for academic writing.

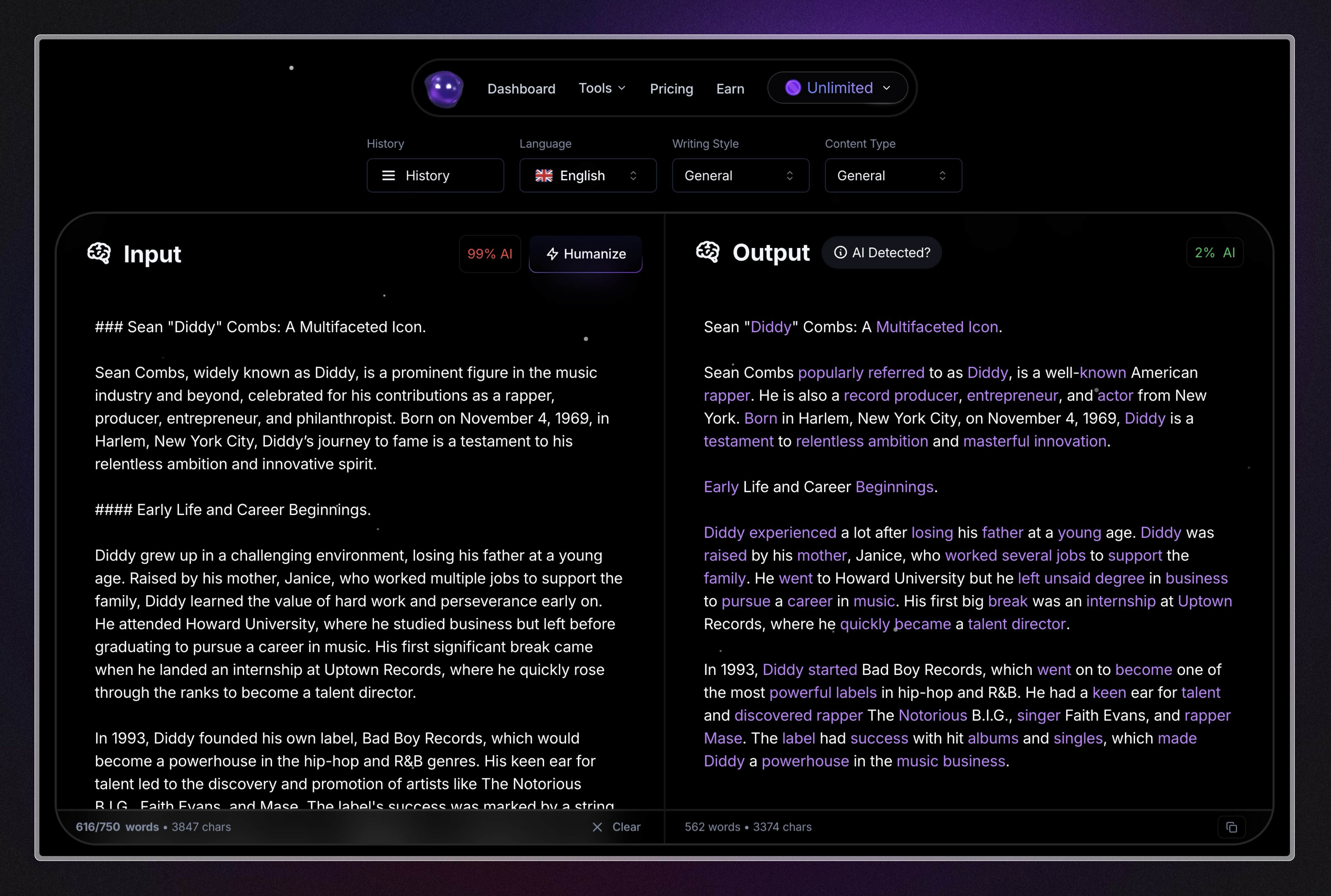

1. Ryne.ai: The Academic-Focused Humanizer

Ryne.ai stands out as the best AI humanizer for Turnitin specifically because it was built with academic writing in mind. Unlike generic humanizer tools that treat all text the same, Ryne understands the difference between an essay and a blog post.

What sets it apart:

The humanizer preserves academic tone while eliminating detection triggers. It maintains proper citation formatting, technical vocabulary, and formal register—elements that generic tools often destroy in the humanization process.

The tool offers adjustable intensity levels, letting you choose between light editing (for text that's almost there) and aggressive restructuring (for content that needs significant transformation). This granular control means you're not stuck with one-size-fits-all processing.

Key features:

- Academic-specific humanization algorithms

- Maintains citation and reference integrity

- Multiple intensity settings

- Preserves technical terminology

- Free tier available for testing

The output consistently passes Turnitin, GPTZero, and Originality.ai without sacrificing readability or academic appropriateness.

2. Undetectable AI: The Forbes-Backed Option

Undetectable AI has earned recognition from Forbes and offers a comprehensive suite of tools beyond basic humanization. The platform includes an AI Essay Writer, SEO Writer, and other productivity tools alongside its core humanizer.

Strengths:

- Multiple readability levels (High School, University, Doctoral)

- Purpose-specific settings (Essay, Story, Marketing)

- Money-back guarantee if humanized content gets flagged

- Built-in detection scoring before and after humanization

Limitations:

- Pricing can add up for heavy users

- Some outputs require additional manual editing

- Academic-specific features less developed than Ryne.ai

The confidence guarantee is notable—they refund humanization costs if the output gets flagged as AI-generated.

3. WriteHuman AI: The Affordable Workhorse

WriteHuman delivers consistent results at competitive pricing. The tool uses an "Enhanced Model" specifically designed for evading sophisticated detectors like Turnitin.

Strengths:

- Multiple tone options including Blog/SEO and Academic

- Reliable detection bypass rates

- Clear, straightforward interface

- Reasonable pricing tiers

Limitations:

- Readability scores sometimes suffer

- May require a human editing pass for polish

- Fewer customization options than competitors

For students on a budget who need reliable humanization without premium pricing, WriteHuman represents solid value.

4. StealthGPT: The Quick-Turn Option

StealthGPT processes content quickly and offers different tone modes for various writing contexts. It's particularly effective for shorter pieces and casual academic writing.

Strengths:

- Fast processing times

- Multiple tone presets

- Good for shorter content pieces

- Intuitive interface

Limitations:

- Can sound slightly mechanical on longer academic papers

- Premium features required for best results

- Less effective for highly technical content

5. Quillbot: The Writing Assistant Approach

Quillbot takes a different approach than dedicated humanizers. Rather than specifically targeting AI detection evasion, it focuses on improving writing quality through paraphrasing, tone adjustment, and structure enhancement.

Strengths:

- Integrates with existing writing workflow

- Grammar and style improvements beyond humanization

- Multiple paraphrasing modes

- Browser extensions and app integrations

Limitations:

- Not specifically designed for AI detection bypass

- Results vary significantly by mode selection

- May not address all detection triggers

Quillbot works best as a complement to dedicated humanizer tools rather than a replacement.

AI Humanizer Comparison Table: Features and Pricing at a Glance

| Tool | Best For | Starting Price | Detection Bypass Rate | Academic Focus |
|---|---|---|---|---|
| Ryne.ai | Academic papers, essays | Free tier available | 95%+ | ⭐⭐⭐⭐⭐ |
| Undetectable AI | General content | $5/month (annual) | 90%+ | ⭐⭐⭐⭐ |
| WriteHuman | Budget users | $12/month | 88%+ | ⭐⭐⭐⭐ |
| StealthGPT | Quick turnaround | $14.99/month | 85%+ | ⭐⭐⭐ |
| Quillbot | Writing improvement | $4.17/month | Variable | ⭐⭐⭐ |

How to Humanize AI Text for Academic Writing: Step-by-Step Process

Getting the best results from any AI humanizer requires more than copying and pasting. Here's the process that consistently produces undetectable, high-quality academic content.

Step 1: Start with Quality Input

The humanizer can only work with what you give it. If you're starting with AI-generated content, make sure it:

- Addresses your actual assignment requirements

- Contains accurate information relevant to your topic

- Follows the basic structure your assignment demands

- Includes placeholder citations for sources you'll properly cite

Garbage in, garbage out applies here. A humanizer transforms text—it doesn't fix fundamental content problems.

Step 2: Choose Appropriate Settings

Different humanizers offer different configuration options. For academic writing:

- Select the highest available academic or formal tone setting

- Choose moderate rather than aggressive humanization for your first pass

- Enable any options that preserve technical vocabulary

- Disable features designed for marketing or casual content

Step 3: Process in Logical Chunks

Rather than running your entire paper through at once, process it section by section:

- Introduction separately

- Each body paragraph individually

- Conclusion on its own

This maintains consistency within sections while allowing you to adjust settings between different parts of your paper.

Step 4: Review and Refine

Humanized text requires human review. Check for:

- Meaning preservation: Does it still say what you intended?

- Flow and transitions: Do paragraphs still connect logically?

- Citation integrity: Are your references still properly formatted?

- Tone consistency: Does it sound like the same writer throughout?

Fix any issues manually before running through detection again.

Step 5: Verify Detection Status

Use a free AI detection tool to check your humanized text before submission. Multiple checkers are better than one, since different detectors use different algorithms.

If sections still flag, run those specific passages through the humanizer again with different settings, or edit manually to address the specific patterns being detected.

The False Positive Crisis: Why Innocent Students Get Flagged

Understanding why legitimate work gets flagged helps you prevent it proactively and defend yourself if false accusations occur.

Clear, Direct Writing Triggers Detection

Academic writing instruction emphasizes clarity, directness, and standard vocabulary. These same qualities—ironically—make text look "AI-generated" to detection algorithms.

A well-written, grammatically correct essay with clear topic sentences and logical transitions produces exactly the kind of low-perplexity text that triggers AI detection. You're being penalized for writing well.

Non-Native English Patterns Create Bias

The Stanford research on AI detection bias isn't theoretical—it describes a systematic problem affecting millions of students worldwide.

Non-native English speakers typically:

- Use more common vocabulary (they learned the most frequent words first)

- Follow grammatical rules more precisely (they were explicitly taught them)

- Avoid idiomatic expressions (which are difficult to learn)

- Structure sentences in predictable patterns (following textbook examples)

Every one of these characteristics makes writing appear "AI-generated" to detection algorithms. A student writing in their second or third language faces systematic discrimination from systems designed to assess their work fairly.

Collaborative and Tutored Work Gets Flagged

Students who work with tutors, writing centers, or peer editors often produce polished text that triggers detection. The irony is profound: students doing exactly what their institutions recommend—seeking help to improve their writing—get flagged for potential academic dishonesty.

Similarly, students who revise extensively often produce "too polished" text that reads as suspicious to algorithms expecting the rougher edges of first-draft writing.

Template Following Looks Like AI

Many courses provide essay templates, outline structures, or rubrics that guide students toward specific organizational patterns. Following these guidelines—exactly as instructed—can produce text that looks template-generated because it literally is template-guided.

What the Research Actually Says: Facts Over Fear

The discourse around AI detection is dominated by institutional fear rather than evidence-based policy. Here's what the research actually demonstrates.

Detection Accuracy Is Highly Variable

Research published in 2025 by MDPI found that AI detection tools showed accuracy ranging from 26% to 76% depending on the specific tool and text type being analyzed. No tool achieved consistent accuracy across all conditions.

The study noted: "AI detectors often misclassify human writing as AI-generated and vice versa." This isn't edge-case performance—it's fundamental unreliability.

Humans Aren't Better at Detection

If AI tools are unreliable, can human reviewers compensate? The research says no.

Studies show that university instructors correctly identified AI-written content only 33% to 66% of the time, a range that runs from worse than a coin flip to only modestly better. More troubling, training instructors to spot AI writing increased their false positive rate significantly.

The only humans who demonstrate reliable AI detection ability are expert GenAI users—people who extensively use AI tools themselves. Most faculty don't fall into this category.

Evasion Is Trivially Easy

Multiple studies demonstrate that paraphrasing tools, simple editing, and basic prompt engineering dramatically reduce detection accuracy.

Research presented at the Conference on Neural Information Processing Systems (NeurIPS) found that the accuracy of one detector, DetectGPT, dropped from 70.3% to just 4.6% at a fixed 1% false positive rate when AI-generated text was processed through a paraphrasing model. Students determined to cheat easily evade detection while honest students face false accusations.

Current Tools Can't Keep Pace

AI language models improve faster than detection technology. Detection tools trained on GPT-3.5 output perform poorly against GPT-4 content. By the time detectors catch up, newer models emerge with different patterns.

This isn't a temporary gap—it's a structural feature of the technology. Detection will always lag behind generation capabilities.

Converting AI to Human Text: Advanced Techniques

Beyond using humanizer tools, these techniques help ensure your content passes detection while maintaining quality.

Inject Personal Voice

AI text lacks personal experience, specific anecdotes, and individual perspective. Adding these elements not only evades detection but genuinely improves your writing.

Instead of: "Many students struggle with academic writing."

Try: "During my second semester, I submitted six drafts of my research methods paper before getting it right."

Personal specificity signals human authorship more effectively than any technical manipulation.

Vary Sentence Structure Deliberately

AI tends toward consistent sentence patterns. Deliberately mix:

- Short declarative statements

- Longer sentences with multiple clauses

- Questions (sparingly, in academic writing)

- Sentences starting with different parts of speech

This creates the "burstiness" that detection algorithms associate with human writing.

Embrace Strategic Imperfection

Perfect grammar and flawless structure can trigger detection. Strategic imperfection helps:

- Occasional sentence fragments (used deliberately for effect)

- Varying paragraph lengths more dramatically

- Starting sentences with "And" or "But" occasionally

- Using contractions appropriately for your tone

This doesn't mean writing poorly—it means writing naturally.

Add Discipline-Specific Terminology

Generic academic vocabulary flags as potentially AI-generated. Discipline-specific terminology signals authentic engagement with your field.

If you're writing about psychology, use psychological terminology correctly. If you're analyzing literature, demonstrate familiarity with literary criticism vocabulary. This subject-matter expertise is difficult for generic AI to replicate convincingly.

Reference Current Events and Recent Sources

AI training data has cutoff dates. Referencing recent events, 2025 publications, or current developments in your field demonstrates engagement that AI-generated content typically can't fake.

Ethical Considerations: Where's the Line?

Let's address the ethics question directly, because pretending it doesn't exist helps no one.

The System Itself Is Unethical

AI detection tools that produce thousands of false accusations annually while discriminating against non-native speakers are ethically problematic. Students using humanizers to protect themselves from a broken system aren't the villains in this story.

When institutions deploy unreliable technology that disproportionately harms vulnerable students, the ethical calculus changes. Using tools to level an unfair playing field isn't cheating—it's self-defense.

Disclosure vs. Detection Evasion

There's a meaningful difference between:

- Using AI to generate content, humanizing it, and claiming you wrote it entirely yourself

- Writing your own content or legitimately editing AI-assisted drafts, then humanizing to prevent false accusations

The first scenario is academic dishonesty. The second is protecting yourself from false accusations.

The Learning Question

The strongest argument against AI humanizer use is that it enables students to avoid genuine learning. This is a real concern.

However, the counter-argument is equally strong: students who live in fear of false AI detection accusations aren't learning better. They're anxious, second-guessing their natural writing voice, and often producing worse work trying to "sound human" artificially.

A rational system would focus on genuine learning assessment rather than detection technology arms races. Until institutions adopt that approach, students must navigate the system that exists.

Frequently Asked Questions About AI Humanizers and Turnitin

Can Turnitin detect humanized text?

Turnitin's AI detection looks for specific patterns in text. Quality humanizer tools modify exactly those patterns while preserving meaning. Well-humanized text consistently evades current Turnitin detection. However, detection technology evolves, so using reputable, actively-maintained humanizer tools provides the best ongoing protection.

Is using an AI humanizer considered cheating?

This depends on your institution's policies and how you use the tool. Using a humanizer on content you legitimately wrote to prevent false AI accusations is ethically different from using it to disguise fully AI-generated work as your own. Check your institution's academic integrity policy for specific guidance.

Do free AI humanizers work as well as paid ones?

Free tools often work adequately for basic humanization, but typically offer fewer features, lower processing limits, and less sophisticated algorithms. For academic writing where accuracy matters significantly, paid options such as Ryne.ai's premium tier generally deliver more reliable results.

How do I humanize text without changing its meaning?

Quality humanizers preserve meaning while altering expression. To ensure meaning retention: process text in smaller chunks, review the output carefully, compare it against the original passage by passage, and manually correct any meaning drift. Lower-intensity humanization settings also preserve meaning better than aggressive restructuring.

Can teachers tell if you used an AI humanizer?

Teachers cannot directly detect humanizer use. They can only assess whether your writing seems consistent with your demonstrated abilities and whether it triggers AI detection tools. Writing that passes AI detection and matches your established voice leaves no evidence of humanizer use.

What's the difference between an AI humanizer and a paraphrasing tool?

Paraphrasing tools rewrite text for general use—improving clarity, changing tone, or avoiding plagiarism. AI humanizers specifically target the patterns that AI detectors look for. While there's overlap, humanizers are purpose-built to evade detection while paraphrasing tools are designed for general rewriting tasks.