Turnitin Proof AI Text Generator Tools: Complete Guide

Turnitin flags your AI text. Your grade drops. And suddenly, a tool that was supposed to save you time just cost you your semester.

That is the reality for millions of students and writers who use AI text generators without understanding how Turnitin's detection actually works, or which tools genuinely produce content that reads as human. The market is flooded with Turnitin-proof AI text generator tools that promise undetectable output, but most of them are selling you a fantasy built on outdated detection logic.

This guide dismantles the noise. You will learn exactly how Turnitin flags AI content, which AI text generator tools actually produce writing that passes, which ones are burning your money, and how to humanize the text you create so it reads the way it should: like a real human wrote it.

How Turnitin's AI Detection Actually Works

Before you can judge which Turnitin-proof AI text generator tools are worth your attention, you need to understand what you are up against.

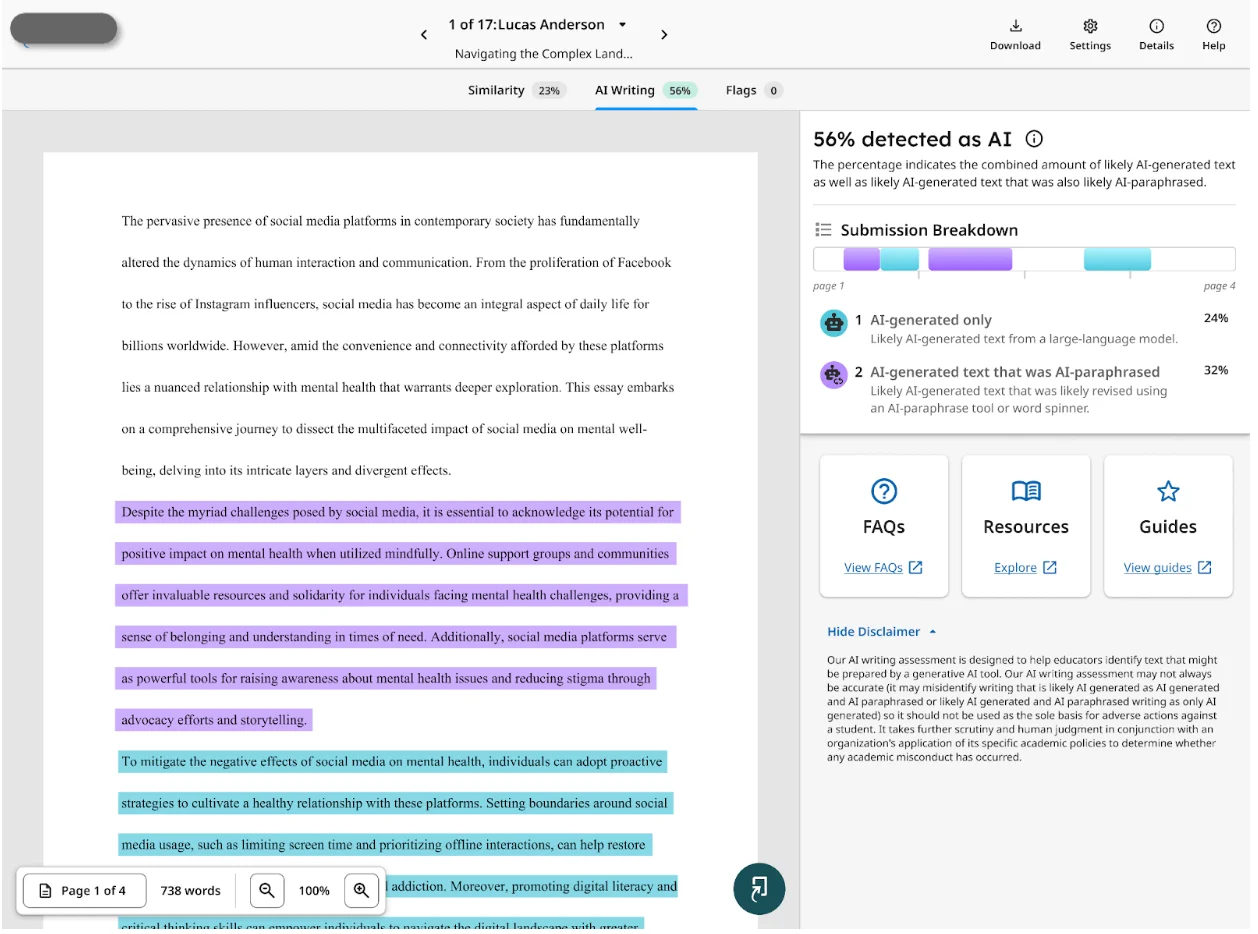

Turnitin's AI detector does not look for plagiarism in the traditional sense. It is not matching your sentences against a database of existing papers. Instead, it analyzes the statistical patterns of your writing: specifically, how predictable your words are in sequence.

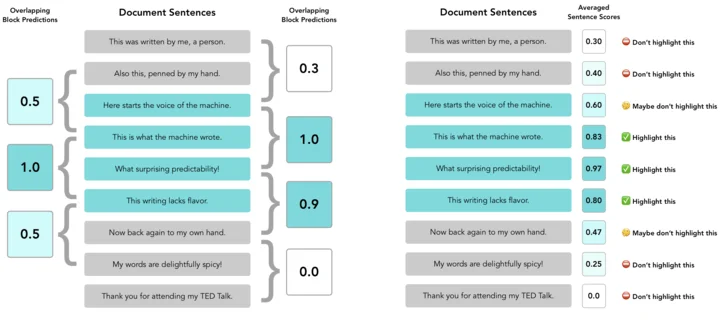

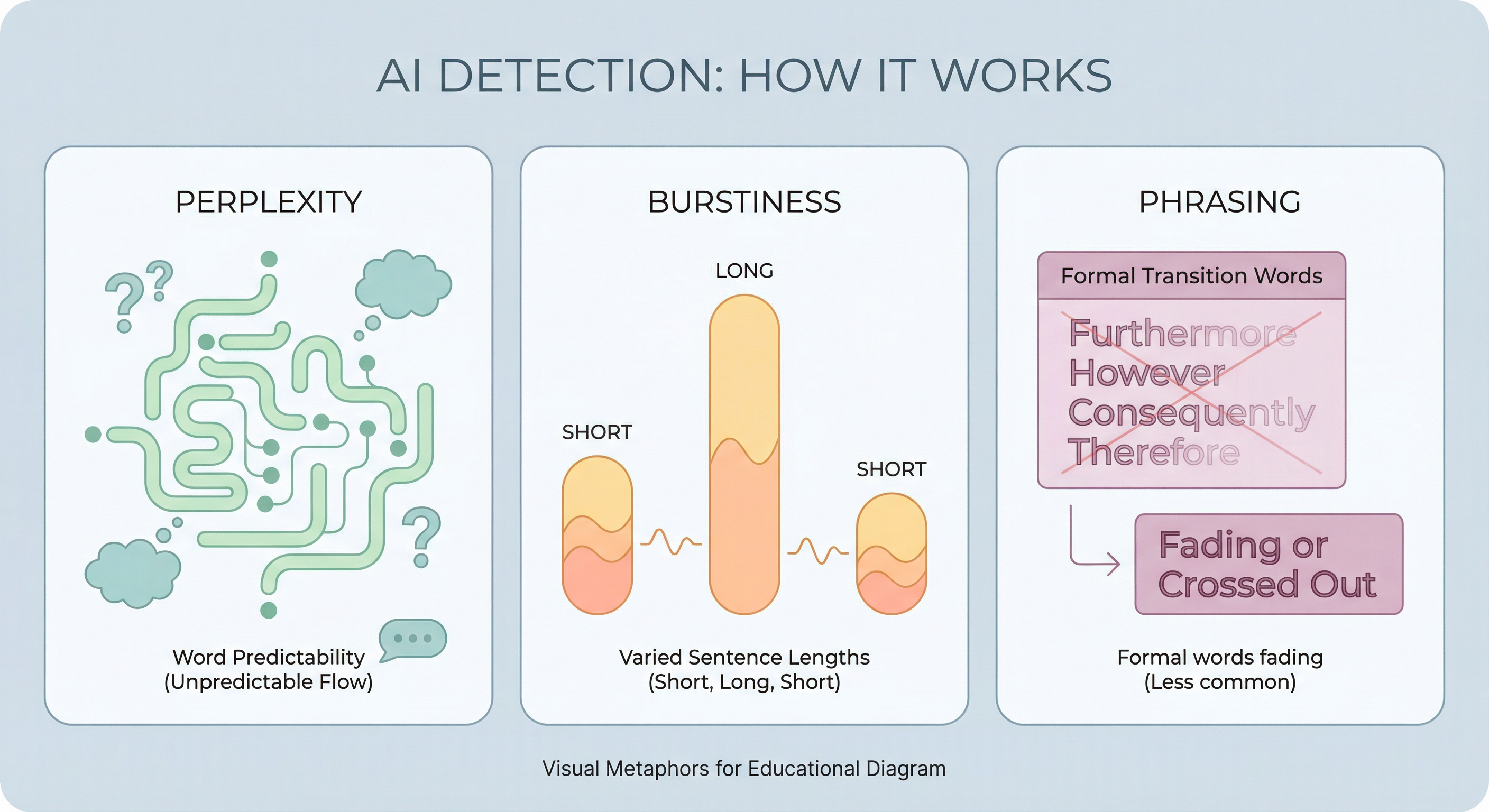

The system breaks your document into overlapping segments of roughly 5 to 10 sentences. Each segment gets scored based on two key metrics: perplexity and burstiness.
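Turnitin does not publish its exact window size or how far consecutive windows overlap, so the sketch below only illustrates the general idea of overlapping segments. The window of 5 sentences, the stride of 2, and the naive regex sentence splitter are all assumptions for illustration, not Turnitin's actual settings.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def overlapping_segments(sentences: list[str], window: int = 5, stride: int = 2) -> list[list[str]]:
    """Group sentences into windows of `window` sentences, each starting `stride` after the previous one.

    window=5 and stride=2 are hypothetical values chosen for illustration.
    """
    if not sentences:
        return []
    if len(sentences) <= window:
        return [sentences]
    segments = [sentences[i:i + window] for i in range(0, len(sentences) - window + 1, stride)]
    # If the stride steps past the end, add one last window so the final sentences are scored too.
    if (len(sentences) - window) % stride:
        segments.append(sentences[-window:])
    return segments


doc = " ".join(f"Sentence number {i}." for i in range(1, 11))
segments = overlapping_segments(split_sentences(doc))
for seg in segments:
    print(seg[0], "...", seg[-1])
```

With these ten sample sentences the sketch produces four windows, covering sentences 1 to 5, 3 to 7, 5 to 9 and 6 to 10. Because the windows overlap, most sentences are scored more than once, which is what lets a detector highlight individual passages rather than only judging the whole document.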

Perplexity measures how "surprising" or unpredictable a sequence of words is. Human writing tends to be more unpredictable — we make odd word choices, vary our rhythms, insert personality. AI-generated text, by contrast, defaults to the most statistically probable word choices, producing text that is almost too clean, too consistent, too predictable.
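Perplexity has a standard textbook definition: the exponential of the average negative log-probability a language model assigns to each token that actually appears. The minimal sketch below assumes you already have those per-token probabilities; both lists are made-up numbers, and a real detector would obtain them from its own model. Turnitin's internal scoring is not public, so treat this as the general concept only.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability of the observed tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log)


# Hypothetical probabilities a model assigned to each word that actually appeared.
predictable = [0.9, 0.8, 0.95, 0.85]  # the model expected nearly every word
surprising = [0.2, 0.05, 0.3, 0.1]    # the model was often wrong about what came next

print(f"predictable text: {perplexity(predictable):.2f}")
print(f"surprising text:  {perplexity(surprising):.2f}")
```

The predictable list scores roughly 1.15 and the surprising one roughly 7.6. A handy way to read the number: if a model were choosing uniformly among k equally likely words at every step, the perplexity would be exactly k, so lower values mean the text was easier for the model to predict.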

Burstiness measures sentence variation. Humans write in bursts: a long, complex sentence followed by a short, punchy one, then a medium one loaded with qualifiers. AI tends to flatten this pattern, producing sentences of remarkably uniform length and structure.
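There is no single agreed formula for burstiness. One common proxy, assumed here, is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The sketch counts words per sentence with a naive splitter; it is not how Turnitin computes anything, just a way to see the idea in numbers.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, using a naive split after '.', '!' or '?'."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (0 means every sentence is the same length)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The model writes a sentence. The model writes another one. The model writes a third one."
bursty = ("I tried. It failed, badly, and for reasons that took me most of the afternoon "
          "and two coffees to untangle. Then it worked.")

print(sentence_lengths(flat), f"{burstiness(flat):.2f}")
print(sentence_lengths(bursty), f"{burstiness(bursty):.2f}")
```

The flat passage has sentence lengths of 5, 5 and 6 words and scores about 0.09; the bursty one has 2, 18 and 3 words and scores about 0.95.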

Turnitin then compiles these scores into an overall AI writing percentage and highlights the specific sections it considers likely AI-generated. The system requires a minimum of 300 words to generate a reliable score.
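Turnitin does not document how it turns segment scores into the overall percentage, so the sketch below is a hypothetical aggregation for illustration only. Each sentence takes the average score of every overlapping window that contains it, sentences above a 0.5 threshold count as flagged, and documents under the 300-word minimum get no score. The segment scores, the threshold, and the averaging rule are all assumptions.

```python
from typing import Optional

MIN_WORDS = 300  # minimum length the article cites for a score


def ai_writing_percentage(
    sentences: list[str],
    segments: list[tuple[int, int, float]],
    threshold: float = 0.5,
) -> Optional[float]:
    """HYPOTHETICAL aggregation, not Turnitin's published method.

    segments: (start, end, score) where sentences[start:end] is one window and
    score is an assumed 0-1 probability that the window is AI-written.
    Returns the percentage of sentences whose averaged score exceeds `threshold`,
    or None when the document is shorter than MIN_WORDS.
    """
    if sum(len(s.split()) for s in sentences) < MIN_WORDS:
        return None
    totals = [0.0] * len(sentences)
    counts = [0] * len(sentences)
    for start, end, score in segments:
        for i in range(start, end):
            totals[i] += score
            counts[i] += 1
    flagged = sum(1 for t, c in zip(totals, counts) if c and t / c > threshold)
    return 100.0 * flagged / len(sentences)


# Ten 40-word sentences (400 words) and four overlapping windows with made-up scores.
sentences = [" ".join(["word"] * 40) + "." for _ in range(10)]
segments = [(0, 5, 0.9), (2, 7, 0.8), (4, 9, 0.2), (5, 10, 0.1)]
print(ai_writing_percentage(sentences, segments))
```

In this example sentences 1 to 5 average above 0.5 and sentences 6 to 10 fall below it, so the sketch reports 50.0. Averaging across overlapping windows is one simple way to smooth out a single noisy segment score; a real system may weight or combine scores quite differently.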

Here is the critical part most people miss: Turnitin's AI detection is not infallible. In testing reported by Times Higher Education, researchers used simple prompt engineering (asking ChatGPT to write like a teenager) to achieve a complete detector bypass, reducing Turnitin's detection rate from 100% to 0%.

A comprehensive 2023 study published in the International Journal for Educational Integrity by Weber-Wulff et al. found that none of the 14 AI detection tools tested — including Turnitin — scored above 80% accuracy. Machine-paraphrased AI text dropped detection accuracy to just 26%.

The takeaway: Turnitin is beatable. Not through some magic button, but through tools and methods that genuinely change AI language to human language at the structural level.

What Makes an AI Text Generator "Turnitin Proof"?

Not every tool that claims to bypass AI detection actually does. The label "Turnitin-proof" gets thrown around by tools that do nothing more than swap synonyms or rearrange sentence fragments. That is not humanizing; that is shuffling deck chairs on a sinking ship.

A genuinely Turnitin-proof AI text generator does one or more of the following:

- The tool increases the perplexity of the output. It introduces word choices and sentence structures that are statistically less predictable, which is the hallmark of real human writing.

- It introduces natural burstiness. Instead of producing paragraph after paragraph of identically structured sentences, the output varies in rhythm, length, and complexity, the way an actual person writes.

- It removes AI fingerprints at the token level. Advanced language models leave detectable statistical signatures in how they select tokens. An effective AI humanizer disrupts these patterns without destroying the meaning of the text.

- It preserves context and coherence. Anyone can scramble a sentence into gibberish and call it "undetectable." The real test is whether the output still makes sense, sounds natural, and communicates the intended idea clearly.

When you are evaluating Turnitin-proof AI text generator tools, these are the criteria that separate the tools that work from the ones that just take your money.

The Top Turnitin Proof AI Text Generator Tools Compared

Let's cut through the marketing claims and look at what actually delivers. Here is a breakdown of the most talked-about tools that claim to produce AI text that passes Turnitin, along with a clear-eyed assessment of each.

1. QuillBot

What it does: QuillBot is primarily a paraphrasing tool that offers multiple rewriting modes (Standard, Fluency, Formal, Academic, etc.). It rephrases sentences while attempting to retain the original meaning.

The reality: QuillBot is solid for basic paraphrasing tasks — cleaning up phrasing, adjusting tone, improving readability. But it was not designed to bypass AI detection. Its output still tends to follow predictable linguistic patterns that Turnitin's system can flag.

Many user reports indicate that running AI text through QuillBot alone does not reliably drop Turnitin's AI detection score to safe levels. The tool works at the surface level, swapping words and restructuring phrases, without addressing the deeper statistical markers that detectors actually measure.

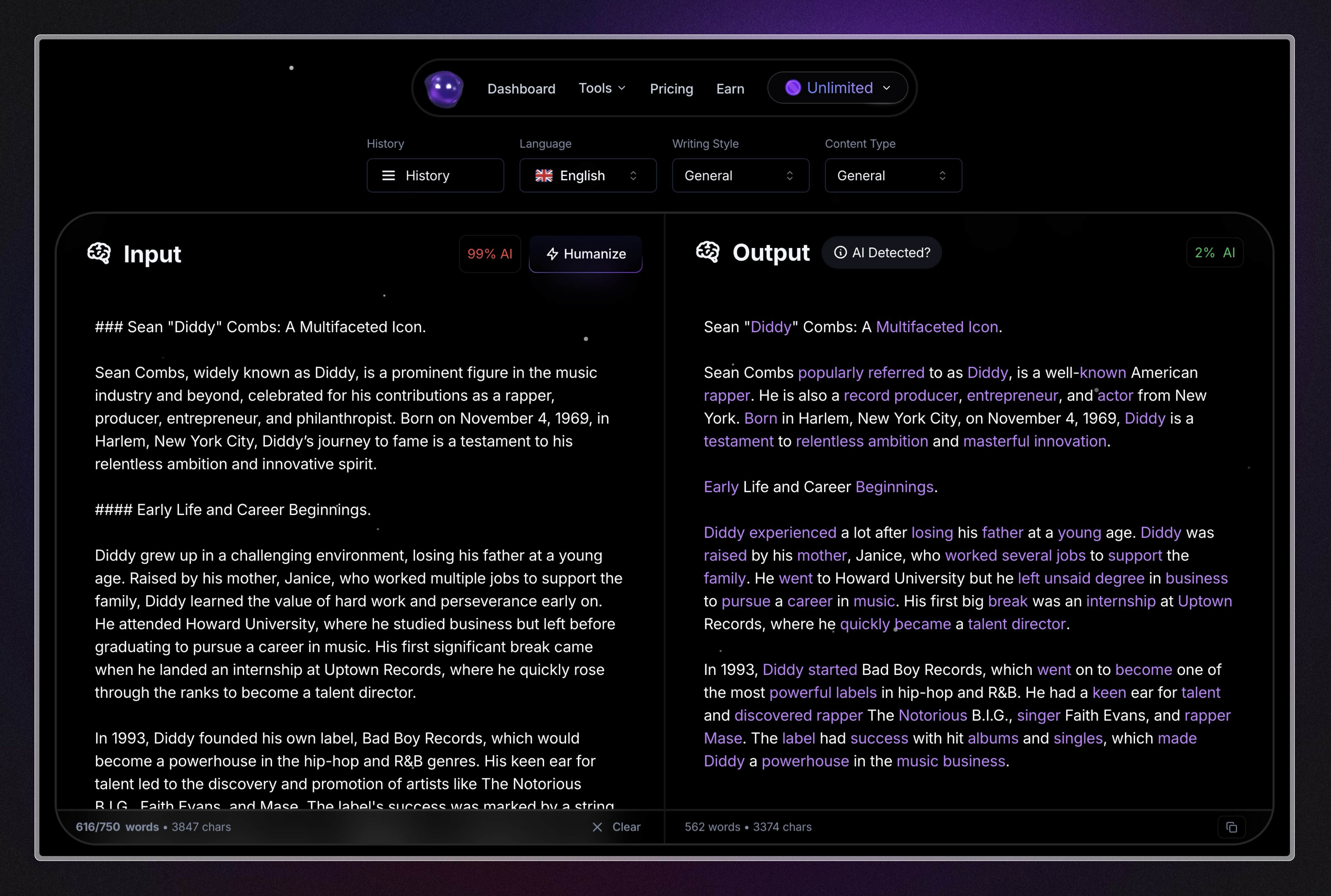

2. Undetectable AI

What it does: Undetectable AI markets itself as a tool that rewrites AI content specifically to bypass detection systems. It scans the text against multiple detectors before outputting a "humanized" version.

The reality: The concept is solid, but execution varies. Some users report success with short-form content, while longer academic papers often come back with inconsistent results. The tool can also alter meaning in ways that require significant manual editing afterward.

For academic writing where precision matters, that is a serious drawback. It does well in certain use cases, but reliability at scale remains a question mark.

3. WriteHuman

What it does: WriteHuman focuses on converting AI text to human text by targeting detection-specific patterns. It claims to work against Turnitin, GPTZero, and other major detectors.

The reality: WriteHuman delivers mixed results. Shorter texts tend to perform better than longer academic essays. The output sometimes reads stiffly, as if the tool is trying too hard to sound human, which ironically makes it sound less human. It is worth testing for specific use cases, but it is not a reliable all-purpose solution for academic work.

4. Humanizer.com

What it does: Humanizer.com is a dedicated AI-to-human text conversion tool that focuses on rewriting AI-generated content to bypass detection systems. It offers a straightforward interface where you paste your AI text and receive a humanized version designed to evade tools like Turnitin, GPTZero, and Originality.ai.

The reality: Humanizer.com performs reasonably well on short to mid-length content. The output reads more naturally than basic paraphrasing tools, and it does attempt to address sentence structure variation rather than just swapping synonyms.

However, results become inconsistent with longer academic papers. Citations and technical terminology sometimes get altered or stripped during the rewriting process, which creates extra editing work for students submitting formal essays or research papers.

It is a step above free one-click paraphrasers, but it still falls short on the consistency and citation preservation that academic writing demands. Worth testing for shorter assignments, but not a set-it-and-forget-it solution for serious coursework.

5. Ryne AI Humanizer

What it does: Ryne's AI Humanizer takes AI-generated text and converts it into natural, human-sounding writing that bypasses AI detection systems including Turnitin. Rather than simple paraphrasing, it restructures at the sentence, rhythm, and vocabulary levels to fundamentally change the statistical profile of the text.

Why it is worth noting: Ryne was built with students and academic writing in mind. The tool focuses on the exact metrics that Turnitin measures — perplexity and burstiness — and adjusts them to mirror genuine human writing patterns.

It also preserves citations, which is a pain point with many other tools that strip or mangle references during the rewriting process. A free tier makes it accessible without requiring a paid commitment upfront.

Why Simple Paraphrasing Does Not Beat Turnitin

This is where most people get it wrong. It is worth spending time on because this misunderstanding is costing students grades.

Paraphrasing (swapping words, rearranging clauses, changing passive voice to active) is what most cheap Turnitin-proof AI text generator tools rely on. And it does not work reliably. The reason is straightforward.

Turnitin's AI detection model was not built to detect specific phrases. It was built to detect patterns. When you paraphrase AI text, you change the surface-level words, but the underlying statistical pattern of the writing — the perplexity score, the burstiness profile, the token prediction probability — often stays the same or close to it.
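A toy demonstration of this point for one dimension of the pattern, sentence rhythm: a word-for-word synonym swap changes the vocabulary but leaves every sentence the same length, so a length-based burstiness measure does not move at all. The synonym table is hypothetical, and the example deliberately says nothing about token probabilities; real paraphrasers also reorder clauses, which this sketch does not model.

```python
import re
import statistics

# Hypothetical synonym table standing in for a basic paraphraser.
SYNONYMS = {"shows": "demonstrates", "big": "large", "use": "employ", "important": "crucial"}


def swap_synonyms(text: str) -> str:
    """Replace whole words one-for-one; sentence structure is untouched."""
    return " ".join(SYNONYMS.get(word, word) for word in text.split())


def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, using a naive split after '.', '!' or '?'."""
    return [len(s.split()) for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (an assumed proxy, not Turnitin's formula)."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) / statistics.mean(lengths) if len(lengths) > 1 else 0.0


original = ("This study shows a big shift in the data. "
            "Researchers use careful methods to confirm the shift. "
            "The results are important for policy makers.")
paraphrased = swap_synonyms(original)

print(paraphrased)
print(sentence_lengths(original), sentence_lengths(paraphrased))
print(burstiness(original) == burstiness(paraphrased))
```

Both versions have sentence lengths of 9, 8 and 7 words, so the burstiness value is identical even though four words changed.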

Research partly supports this. The Weber-Wulff et al. study tested machine-paraphrased AI text against 14 detection tools and found that while paraphrasing did reduce detection rates, the effect was wildly inconsistent: some tools still caught it, and accuracy varied dramatically by document.

Stanford researchers (Liang et al., 2023) found an even more troubling issue: AI detectors misclassified over 61% of essays written by non-native English speakers as "AI-generated." This suggests these tools are also biased against certain writing styles.

The point: paraphrasing is not humanizing. To truly convert AI to human text that survives Turnitin, you need a tool that operates at the statistical pattern level — not just the vocabulary level. That is the difference between a basic paraphraser and a dedicated AI humanizer that can bypass AI detection.

How to Actually Use AI Text Generators Without Getting Flagged

Let's get practical. Here is the workflow that actually works for producing AI-assisted content that passes Turnitin's checks — not just sometimes, but consistently.

- Start with your own outline and thesis. AI is a writing accelerator, not a writing replacement. If you hand ChatGPT a blank prompt and submit whatever it spits back, you deserve to get caught. Build your argument structure, define your thesis, and identify your sources.

- Use AI for drafting, not for final copy. Generate a rough draft using your preferred AI text generator. Use it to flesh out sections, overcome writer's block, or explore how to structure a complex argument. But treat this output as raw material, not a finished product.

- Run the draft through a dedicated AI humanizer. This is the critical step. A quality AI humanizer takes that AI-generated draft and fundamentally restructures it so the statistical fingerprint matches human writing. This is not paraphrasing; it is an AI-to-human conversion at the pattern level.

- Add your personal voice and course-specific details. Reference your professor's lecture points. Cite the specific readings assigned in your syllabus. Mention class discussions. An AI cannot supply these details on its own, and they signal genuine engagement with the material.

- Edit manually one final time. Read the piece aloud. Vary your sentence lengths. Add a personal anecdote or observation. Inject the imperfections and personality that make writing unmistakably human.

- Keep your drafts. Save every version: the outline, the AI draft, the humanized version, the final edit. If your work is ever questioned, having a documented process is your strongest defense.
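If you want a documented process that is more than a folder of loose files, a small script can save each version with a UTC timestamp and a SHA-256 hash in an append-only log. This is one possible approach, not a format any institution requires; the folder layout, file names, and log fields are assumptions, and a version-control tool such as git does the same job.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_draft(stage: str, text: str, folder: Path) -> dict:
    """Save one draft version to `folder` and append a record of it to log.jsonl."""
    folder.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    path = folder / f"{stamp}_{stage}.txt"
    path.write_text(text, encoding="utf-8")
    record = {
        "stage": stage,  # e.g. "outline", "ai_draft", "humanized", "final"
        "file": path.name,
        "saved_at_utc": stamp,
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "words": len(text.split()),
    }
    # Append-only log: earlier records are never rewritten.
    with open(folder / "log.jsonl", "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return record


if __name__ == "__main__":
    # Demo in a throwaway directory; point `folder` at a real path for actual use.
    with tempfile.TemporaryDirectory() as tmp:
        drafts = Path(tmp) / "drafts"
        log_draft("outline", "Thesis: detectors measure patterns, not intent.", drafts)
        log_draft("final", "Final essay text goes here.", drafts)
        print((drafts / "log.jsonl").read_text(encoding="utf-8"))
```

The hash lets you show later that a saved file has not been edited since the time recorded next to it.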

This workflow is not about cheating a system. It is about using AI as the tool it was designed to be — while understanding that the system you are operating within has rules, and those rules penalize laziness, not intelligence.

The Ethics Question: Where the Line Actually Is

Every conversation about Turnitin-proof AI text generator tools eventually arrives here. So let's address it directly.

Using AI in your academic workflow is not inherently unethical. A 2025 survey by the UK's Higher Education Policy Institute (HEPI) found that 88% of students have used generative AI for assessments. The most popular uses are explaining concepts (58%), summarizing articles (48%), and suggesting research ideas (41%).

Only 18% reported using AI-generated text directly in assessments. The data shows that most students use AI to supplement their learning, not to subvert it.

Every university handles AI and academic integrity policies differently, but the ethical line is clear: if your institution prohibits AI-generated text in submissions and you submit AI-generated text without disclosure, that is academic dishonesty. Full stop.

But if your course allows AI-assisted writing, or if you use AI to draft and then substantially rework and humanize the text into your own voice and argument, you are operating within the norms that the majority of universities are moving toward.

The problem is that AI detection creates collateral damage. The Stanford study referenced earlier demonstrated that AI detectors are biased against non-native English speakers, with an average false positive rate of about 61% on TOEFL essays. That means students who wrote their work entirely on their own, in their second or third language, are being falsely accused of using AI.

UCLA declined to adopt Turnitin's AI detection feature specifically because of these concerns about accuracy and false positives.

An AI humanizer in this context is not a cheating tool. For many students, it is a fairness tool — a way to ensure that their legitimate writing is not mischaracterized by a flawed detection system. Understanding whether Turnitin can detect humanized AI is not about gaming the system. It is about protecting yourself within a system that is far from perfect.

What to Look For When Choosing a Turnitin Proof AI Text Tool

Not all tools are built the same. Here is what separates the legitimate options from the noise when you are evaluating Turnitin-proof AI text generator tools.

- Pattern-level rewriting, not synonym swapping. If the tool only changes words, it is a paraphraser, not a humanizer. You need a tool that restructures sentences, varies rhythm, and disrupts the statistical patterns that detectors flag.

- Academic writing support. Many humanizer tools are built for marketing copy and blog posts. Academic writing has different conventions: citations, formal structure, discipline-specific terminology. A tool that strips out your citations or mangles technical vocabulary is useless for university work.

- Transparency about what it does. Avoid tools that market themselves with vague promises of "100% undetectable" output. No tool guarantees that. The honest tools tell you what they change, how they change it, and what the limitations are.

- Free tier or affordable pricing. Students are not running enterprise budgets. A tool that locks everything behind a $50/month paywall is not serving the people who need it most.

- Consistent results on academic-length content. Many tools perform well on 200-word paragraphs and fall apart at 2,000 words. Test with actual academic-length content before committing.
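When you test a tool on academic-length content, one quick check is whether every in-text citation in your draft survives the rewrite. The sketch below assumes two citation styles, parenthetical author-year references such as "(Weber-Wulff et al., 2023)" and numeric brackets such as "[4]"; the regular expressions are illustrative and will need adjusting for other styles or unusual formatting.

```python
import re
from collections import Counter

# Illustrative patterns: any parenthetical ending in a year, e.g. "(Liang et al., 2023)",
# and numeric brackets such as "[4]" or "[1, 2]". Adjust for your citation style.
AUTHOR_YEAR = re.compile(r"\([^()]*\d{4}[a-z]?\)")
NUMERIC = re.compile(r"\[\d+(?:\s*[,-]\s*\d+)*\]")


def extract_citations(text: str) -> Counter:
    """Count every in-text citation the patterns above recognize."""
    return Counter(AUTHOR_YEAR.findall(text) + NUMERIC.findall(text))


def missing_citations(before: str, after: str) -> list[str]:
    """Citations present in `before` that are missing (or appear fewer times) in `after`."""
    lost = extract_citations(before) - extract_citations(after)
    return sorted(lost.elements())


draft = "Detectors are unreliable (Weber-Wulff et al., 2023). They also show bias [4]."
rewritten = "Detection tools are not reliable (Weber-Wulff et al., 2023). They are biased too."
print(missing_citations(draft, rewritten))
```

Here the rewrite dropped the numeric reference, so the check returns `['[4]']`. Using `Counter` rather than a set also catches the case where a source cited twice in the draft appears only once after rewriting.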

The Comparison: How Leading Tools Stack Up

| Feature | QuillBot | Undetectable AI | WriteHuman | Humanizer.com | Ryne AI Humanizer |
|---|---|---|---|---|---|
| Designed for academic writing | ❌ No | Partial | Partial | Partial | ✅ Yes |
| Pattern-level humanizing | ❌ Synonym-level | ✅ Yes | ✅ Yes | Partial | ✅ Yes |
| Free tier available | ✅ Limited | ❌ No | ❌ No | ✅ Limited | ✅ Yes |
| Preserves citations | Partial | ❌ Often strips | Partial | ❌ Inconsistent | ✅ Yes |
| Consistent on long-form content | ❌ Inconsistent | Partial | ❌ Inconsistent | ❌ Inconsistent | ✅ Yes |
| Built to bypass Turnitin | ❌ Not designed for this | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |

Each tool has its niche. QuillBot is fine for general paraphrasing. Undetectable AI works for short-form content. WriteHuman is worth testing case by case.

But for academic writing specifically — where citations, long-form consistency, and pattern-level rewriting all matter — Ryne checks every box.

What the Research Actually Says About AI Detection Accuracy

If you are going to rely on Turnitin-proof AI text generator tools, you should understand the research landscape that shapes this entire conversation.

The Weber-Wulff et al. (2023) study — the most comprehensive independent test of AI detection tools to date — was published in the International Journal for Educational Integrity. It tested 14 tools against 54 documents with known origins.

Their conclusion: "The available detection tools are neither accurate nor reliable and have a main bias towards classifying the output as human-written rather than detecting AI-generated text." Even the best-performing tool (Turnitin) scored below 80% accuracy.

The Liang et al. (2023) study from Stanford, published in Patterns, demonstrated that GPT detectors are biased against non-native English writers. The average false positive rate was 61.22% on TOEFL essays. Seven different detectors unanimously misidentified 18 out of 91 TOEFL essays as AI-generated.

Simple prompting (asking GPT to "elevate" the language) reduced this bias, but the fundamental flaw remains.

A 2025 article from UCLA's HumTech division cataloged these failures. It noted that even OpenAI shut down its own AI detector because it only correctly identified 26% of AI-written text while falsely flagging 9% of human writing.
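Those two rates matter more than they look once you account for how much of the submitted text is actually AI-written. The sketch below applies standard positive-predictive-value arithmetic to the OpenAI figures quoted above; the prevalence values are assumptions chosen for illustration, not measurements. At a hypothetical 10% prevalence, only about 24% of flagged documents would actually be AI-written.

```python
def flagged_share_correct(tpr: float, fpr: float, prevalence: float) -> float:
    """Positive predictive value: the share of flagged documents that really are AI-written.

    tpr: fraction of AI-written documents the detector flags
    fpr: fraction of human-written documents it wrongly flags
    prevalence: assumed fraction of all submissions that are AI-written
    """
    true_flags = tpr * prevalence
    false_flags = fpr * (1 - prevalence)
    return true_flags / (true_flags + false_flags)


# Rates quoted for OpenAI's discontinued classifier: 26% caught, 9% false positives.
for prevalence in (0.1, 0.3, 0.5):
    share = flagged_share_correct(0.26, 0.09, prevalence)
    print(f"{prevalence:.0%} of submissions AI-written -> {share:.0%} of flags correct")
```

The loop prints 24%, 55% and 74%. Even in the hypothetical case where half of all submissions are AI-written, roughly a quarter of the flags in this sketch land on human work.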

This is not a detection system operating with surgical precision. It is a probability engine with documented biases and significant error rates. Understanding this reality is essential context for any student or professional using AI writing tools.

It is also why an effective AI humanizer is not a luxury — it is increasingly a necessity for anyone producing content in environments where these detectors are used.

Frequently Asked Questions

Does Turnitin detect AI-generated text from ChatGPT?

Yes, Turnitin can detect text generated by ChatGPT and similar large language models. It analyzes statistical patterns like perplexity and burstiness rather than matching against a database. However, research shows its accuracy is below 80%, and simple prompt engineering has reduced detection rates to 0% in controlled tests.

Can QuillBot bypass Turnitin's AI detection?

QuillBot is not designed to bypass AI detection and does not reliably reduce Turnitin's AI scores. It operates at the synonym and sentence-restructuring level, which does not address the deeper statistical patterns Turnitin measures. For consistent results, you need a dedicated AI humanizer that works at the pattern level.

What is the best AI humanizer for Turnitin?

The best AI humanizer for Turnitin is one that restructures text at the perplexity and burstiness levels, preserves academic citations, and delivers consistent results on long-form content. Based on those criteria, Ryne's AI Humanizer is a strong option — it offers pattern-level rewriting and a free tier for students.

Are AI detection tools biased against non-native English speakers?

Yes. A Stanford study by Liang et al. (2023) found that AI detectors misclassified over 61% of essays written by non-native English speakers as AI-generated. Seven detectors unanimously flagged 18 out of 91 TOEFL essays incorrectly. This documented bias is a significant concern in academic settings.

Is using an AI humanizer considered cheating?

It depends on your institution's policies. If your school prohibits AI-generated text and you submit humanized AI content without disclosure, that could violate academic integrity rules. However, if AI-assisted writing is permitted, using a humanizer to refine drafts is within acceptable norms. Many students also use humanizers to protect legitimately hand-written work from false positives.

How accurate is Turnitin's AI detection?

According to the Weber-Wulff et al. (2023) study, Turnitin scored below 80% accuracy when tested against 54 documents with known origins. Machine-paraphrased AI text dropped detection accuracy to just 26%. Even OpenAI discontinued its own AI detector due to a 74% failure rate in correctly identifying AI-written text.

Conclusion

Turnitin's AI detection is a probability system — not a perfect one. It flags patterns, not intent, and the research is clear that its accuracy falls well short of the certainty it implies.

For students and writers using AI as part of their workflow, the real task is not to trick a detector. It is to produce work that genuinely reflects human thought, voice, and effort.

The tools covered in this guide range from basic paraphrasers to pattern-level humanizers, and the gap between them matters. Surface-level word swapping will not protect your work. What does work is understanding how detection operates, choosing a tool built to address it at the right level, and layering in your own thinking and edits on top.

If you are looking for a place to start, Ryne's free AI Humanizer is built for exactly this — academic-grade humanizing that restructures AI text at the statistical level without stripping your meaning or citations. Run your draft through it, edit the output in your own voice, and submit with confidence.

Your writing should be judged by what you said — not by what an algorithm guessed.