Why is my essay detected as AI?

Here's the truth nobody wants to tell you: AI detectors are broken. They're flagging your legitimate work as machine-generated because these tools are fundamentally flawed, biased, and operating on guesswork. You wrote every word yourself, yet some algorithm decided you're a cheater.

The system is rigged against you—and we're about to expose exactly why.

The Detection Disaster: Why Your Human Writing Gets Flagged

AI detectors don't actually "detect" AI. They guess. They analyze patterns, sentence structures, and word choices, then make probabilistic bets about whether a human or machine wrote your text. The problem? Humans often write exactly the way detectors expect AI to write, especially when following academic guidelines.

Research from Stanford University reveals the ugly truth: GPT detectors are systematically biased against non-native English speakers. The study found that some detectors flagged over 60% of TOEFL essays written by non-native speakers as AI-generated, even though those essays were written years before ChatGPT existed.

A 2025 study from Arizona State University tested multiple AI detectors on verified human-written essays. The results? Even the "best-performing" detectors produced false positive rates between 1.3% and 5%. That means for every 100 genuine essays, up to 5 are falsely flagged. In a class of 500? That's roughly 6 to 25 innocent students branded as cheaters.
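The arithmetic behind those numbers can be sketched in a few lines of Python. This is a minimal illustration, not anything taken from the study: the function name `expected_false_flags` is made up here, and the calculation assumes every essay in the class is genuinely human-written and that the quoted 1.3% and 5% rates apply uniformly.

```python
def expected_false_flags(num_essays: int, false_positive_rate: float) -> float:
    """Expected number of human-written essays wrongly flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return num_essays * false_positive_rate


class_size = 500  # assumed: all 500 essays are genuinely human-written
low = expected_false_flags(class_size, 0.013)   # lower bound quoted above
high = expected_false_flags(class_size, 0.05)   # upper bound quoted above
print(f"{low:.1f} to {high:.1f} essays falsely flagged")
```

The same function works for any class size or rate you want to plug in, which makes it easy to show how quickly a "small" error rate scales.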

The Five Reasons Your Essay Triggers False Alarms

1. You Write Too Well (Yes, Really)

AI detectors flag "perfect" writing. Clear structure, proper grammar, logical flow—the exact qualities your professors demand—make you look suspicious. The detection algorithms expect human writing to be messier, more chaotic, with occasional errors. When you proofread thoroughly and organize your thoughts coherently, you're literally punished for excellence.

Students who follow academic writing conventions religiously—topic sentences, supporting evidence, conclusions—create patterns that mirror AI output. The machine doesn't see a diligent student; it sees another machine.

2. You're Not a Native English Speaker

This is where the bias gets criminal. Stanford researchers discovered that AI detectors disproportionately flag non-native English speakers as using AI. Why? Non-native speakers often write with more formal structures, simpler sentence constructions, and standardized vocabulary—exactly what AI models produce.

The detectors interpret careful, deliberate language choices as "robotic." Your effort to write clearly in your second language becomes evidence against you. The system doesn't recognize linguistic diversity; it treats any deviation from native English patterns as suspicious.

3. You Used Common Academic Phrases

"In conclusion," "Furthermore," "This essay will explore"—these phrases doom you. AI detectors have learned that ChatGPT loves these transitions. But guess what? So does every academic writing guide ever published. You're following the rules you were taught, using the exact phrases your professors expect, and the AI detector treats standard academic language as a red flag.

The detection algorithms can't distinguish between a student who learned proper essay structure and an AI that was trained on millions of properly structured essays. The overlap is massive, and you're caught in the crossfire.

4. Your Topic Is Too Common

Write about climate change, Shakespeare, or cell biology? Congratulations, you've entered the danger zone. AI models have been trained on thousands of essays about these topics. When you write about well-covered subjects, your original thoughts inevitably echo existing content that AI has absorbed and now regurgitates.

The detector sees familiar concepts explained in familiar ways and assumes AI involvement. It doesn't matter that you researched independently, formed your own conclusions, or spent hours crafting your argument. Common topics produce common language patterns, and common patterns trigger false positives.

5. You Revised Too Much

Here's the ultimate irony: the more you polish your writing, the more suspicious you look. Multiple drafts, careful editing, peer review—all the practices of good writing—strip away the human quirks that detectors look for. Your revised essay becomes smoother, more logical, more consistent—more "artificial."

Tools like Grammarly make this worse. They standardize your writing, fixing inconsistencies and smoothing rough edges. You're using technology to improve your human writing, but detectors see the technological fingerprint and cry foul.

The Technical Truth: How Detection Actually Works (And Fails)

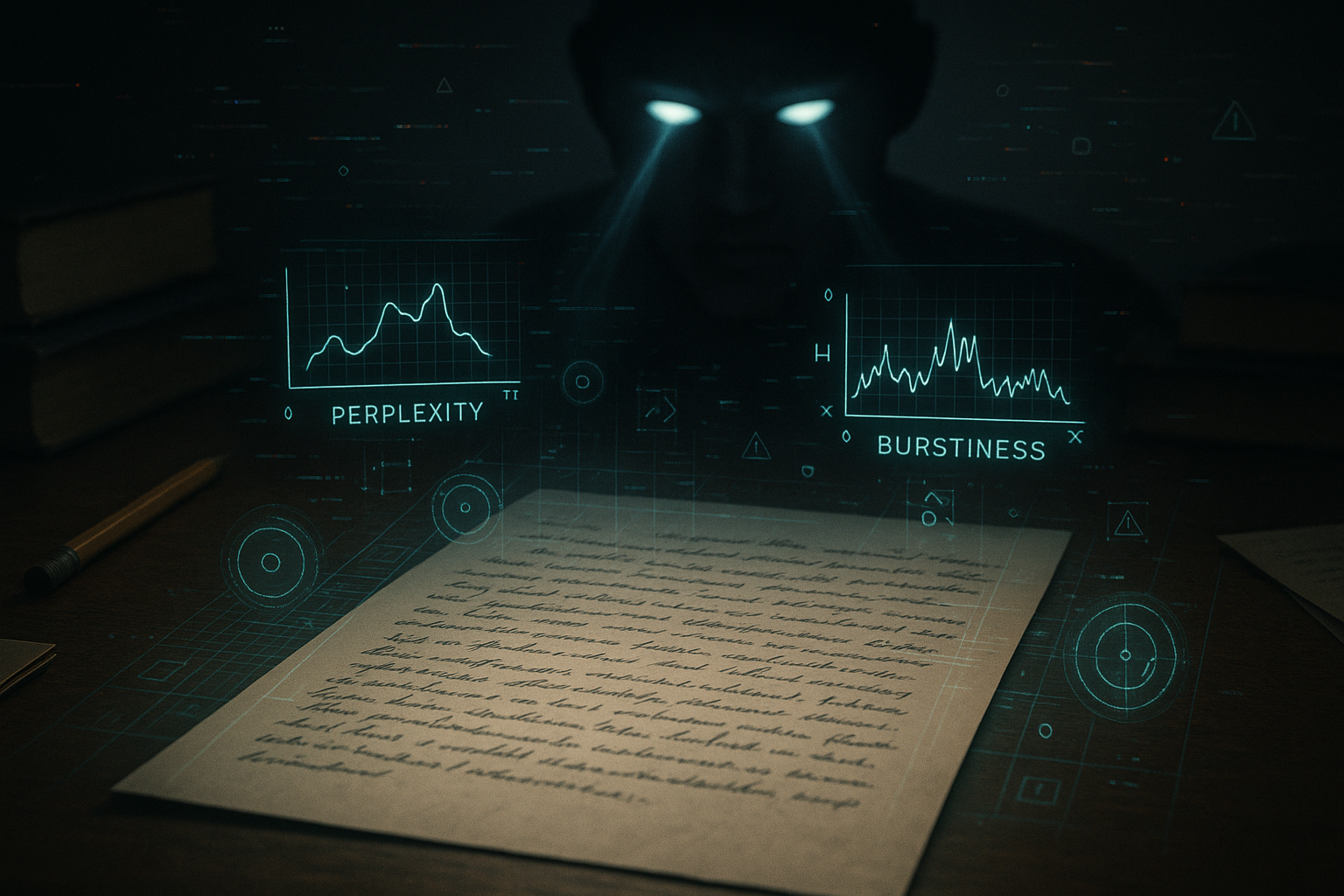

AI detectors typically analyze two main signals: perplexity and burstiness. Perplexity measures how predictable your text is to a language model; lower perplexity suggests AI. Burstiness measures how much your sentences vary in length and structure; uniform sentences suggest AI. Here's the problem: good academic writing often has low perplexity (it's clear and logical) and low burstiness (it maintains a consistent style).
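To make those two signals concrete, here is a rough Python sketch. It is a toy, not how any commercial detector works: real tools score text with large neural language models and do not publish their formulas. This sketch assumes a smoothed unigram word model as a stand-in for perplexity and the coefficient of variation of sentence lengths as a stand-in for burstiness. All function names are illustrative.

```python
import math
import re
import statistics
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text: str) -> list[str]:
    """Naive split on whitespace that follows ., ! or ?."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no tokens")
    vocab_size = len(ref_counts) + 1  # one extra bucket for unseen words
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths, measured in words."""
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The dog ran off across the wide muddy field. Why?"
print(burstiness(uniform), burstiness(varied))
```

Notice that the evenly paced sample scores lower on burstiness than the choppy one. A tidy, consistent essay lands on the "AI-like" side of this measure for the same reason.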

These tools use statistical models to compare your writing against patterns they've learned. But they're comparing your work against a moving target. As AI models evolve, detection tools scramble to keep up. What triggered detection last month might pass today, and what passes today might fail tomorrow.

The University of San Diego's Legal Research Center found that detection tools produce high rates of both false positives and false negatives. Turnitin admits its tool misses roughly 15% of AI-generated text in order to avoid false positives, yet it still reports a 1% false positive rate. At that rate, every 100,000 human-written submissions produce about 1,000 false flags. That's thousands of students worldwide falsely accused.

The Institutional Failure: Why Schools Keep Using Broken Tools

Universities know these tools are flawed. The research is clear, the bias documented, the failure rates published. Yet they deploy them anyway. Why? Because the alternative—actually reading and evaluating student work—requires time and effort they won't invest.

These institutions chose the lazy path: automated accusation over human judgment. They'd rather risk destroying innocent students' academic careers than admit they can't actually detect AI use reliably. The burden of proof has shifted—you're guilty until proven innocent, and the proof of innocence is nearly impossible to provide.

Some professors proceed with caution, understanding the limitations. But many treat detector results as gospel, unaware that they're wielding a broken weapon. The power dynamic is completely skewed—a flawed algorithm's guess can override your honest work.

The Real Solution: Take Control of Your Narrative

Your academic reputation is on the line, and a false accusation can damage it the same way misinformation damages anyone's online presence. Reputation management professionals know the importance of controlling your narrative online. Take the same control of your academic narrative before false detection claims harm your future.

Stop playing defense in a rigged game. The detection tools are broken, the system is biased, and waiting for fairness is futile. You need tools that work for you, not against you.

This is where Ryne's AI Humanizer changes everything. Instead of hoping your genuine writing doesn't trigger false positives, you ensure it won't. Our humanizer doesn't just evade detection—it enhances your authentic voice while eliminating the patterns that trigger false alarms.

We're not teaching you to cheat; we're protecting you from false accusations. Your original ideas, your research, your arguments—preserved and protected from algorithmic discrimination. The humanizer maintains your message while adding the natural variations that distinguish human writing from both AI output and the rigid patterns that trigger false positives.

The Practical Playbook: Protecting Yourself Now

Document Everything

Screenshot your writing process. Save drafts with timestamps. Record your research sources. When falsely accused, evidence of your process becomes your defense. The burden of proof shouldn't be yours, but until the system changes, protect yourself.
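If you draft in local files, saving timestamped copies can be automated. The following is a minimal Python sketch under stated assumptions: the function name `snapshot_draft`, the `draft_history` folder, and the `log.txt` format are all invented for illustration. Each call copies the draft under a UTC timestamp and logs a SHA-256 hash of the copy.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot_draft(draft_path: str, archive_dir: str = "draft_history") -> Path:
    """Copy the draft into archive_dir under a UTC timestamp and log its hash."""
    source = Path(draft_path)
    archive = Path(archive_dir)
    archive.mkdir(parents=True, exist_ok=True)

    # Microsecond precision keeps names unique across quick successive saves.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    copy_path = archive / f"{source.stem}_{stamp}{source.suffix}"
    shutil.copy2(source, copy_path)  # copy2 also preserves file metadata

    # The hash lets you show later that the archived copy was not altered.
    digest = hashlib.sha256(copy_path.read_bytes()).hexdigest()
    with (archive / "log.txt").open("a", encoding="utf-8") as log:
        log.write(f"{stamp}  {digest}  {copy_path.name}\n")
    return copy_path


# Hypothetical usage, run after each writing session:
# snapshot_draft("essay.docx")
```

Treat this as supporting evidence rather than proof, since local files and timestamps can be edited. Version history kept by a third party, such as a cloud document editor, is harder to dispute.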

Understand Your Rights

Most institutions have appeals processes for academic integrity violations. Know them before you need them. Understand what evidence they accept, what standards they use, and what recourse you have. Don't wait until you're accused to learn the rules.

Use Multiple Checkers

No single detector is reliable. Before submitting, run your work through multiple detection tools. If different tools give different results (they will), you have evidence of the system's unreliability. Document these inconsistencies—they're your ammunition against false accusations.
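One way to document those inconsistencies is to record each tool's score by hand and summarize the disagreement. The Python sketch below is purely illustrative: the detector names and scores are made up, no real detector API is called, and the 0.5 cutoff for "flagged" is an assumption, since each tool defines its own threshold.

```python
def summarize_disagreement(scores: dict[str, float], threshold: float = 0.5) -> dict:
    """Summarize how far apart several detectors' AI-likelihood scores are.

    `scores` maps a detector name to a score between 0 and 1 that you
    recorded manually. `threshold` is an assumed cutoff, not a standard.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    flagged = sorted(name for name, s in scores.items() if s >= threshold)
    cleared = sorted(name for name, s in scores.items() if s < threshold)
    return {
        "flagged": flagged,
        "cleared": cleared,
        "spread": max(scores.values()) - min(scores.values()),
        "detectors_disagree": bool(flagged) and bool(cleared),
    }


# Made-up scores for the same essay from three hypothetical tools.
example_scores = {"detector_a": 0.08, "detector_b": 0.71, "detector_c": 0.35}
report = summarize_disagreement(example_scores)
print(report)
```

Save the report alongside screenshots of each tool's result page, since a summary you typed yourself carries less weight than the original output.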

Write Strategically

Add personal anecdotes. Include specific, unique examples. Vary your sentence structure deliberately. Reference recent events that postdate AI training data. These elements don't guarantee safety, but they reduce false positive risk.

But here's the thing—you shouldn't have to write defensively. You shouldn't modify your natural style to appease broken algorithms. That's why Ryne's Humanizer exists. Write naturally, then protect yourself.

The Bigger Picture: This Is About Power, Not Plagiarism

AI detection isn't about academic integrity—it's about control. Institutions want easy solutions to complex problems. They want to outsource judgment to algorithms rather than invest in real evaluation. They want to appear tough on cheating without doing the actual work of teaching and assessment.

You're collateral damage in their theater of accountability. Your genuine work, your honest effort, your academic future—all sacrificed to maintain the illusion that they can control AI use. They can't. These tools don't work. The research proves it.

The Stanford study's authors were blunt: these detectors are "unreliable and easily gamed." The Arizona State research showed that using multiple detectors in aggregate only slightly improves accuracy—and still produces false positives. The system is fundamentally broken.

The Resistance Strategy: Turn Their Tools Against Them

Here's what they don't want you to know: the same technology causing these problems can solve them. AI humanization isn't cheating—it's self-defense against a broken system. When the game is rigged, you don't play fair; you play smart.

Ryne's AI Humanizer doesn't generate content for you. It protects your content from false flags. Your ideas, your research, your voice—preserved and protected. We're not helping you cheat; we're preventing you from being cheated by a system that's already failed you.

The institutions chose unreliable automation over human judgment. They chose efficiency over accuracy. They chose to risk your future rather than fix their broken systems. You don't owe loyalty to a system that treats you as guilty until proven innocent.

The Future Reality: This Problem Gets Worse Before It Gets Better

AI models are evolving faster than detection tools can adapt. What works today fails tomorrow. The arms race between generation and detection is accelerating, and you're caught in the middle. Every semester brings new detection tools, new false positive rates, new innocent victims.

Universities won't abandon these tools quickly. They've invested money, reputation, and policy in detection technology. Admitting failure means admitting they can't actually detect AI use—undermining their entire academic integrity framework. They'll double down before they back down.

Your choice is simple: hope the broken system doesn't randomly select you for false accusation, or take control of your academic narrative. Hope isn't a strategy. Protection is.

The Bottom Line: Stop Being a Victim

You didn't create this problem. You're not responsible for fixing it. But you are responsible for protecting yourself from it. The research is clear: AI detectors are biased, unreliable, and getting worse. False positives aren't rare accidents—they're systematic failures.

Every essay you submit is a roll of the dice. Will your legitimate work trigger false alarms? Will your careful writing, your non-native English, your common topics, or your thorough editing make you look guilty? Why gamble with your academic future?

Ryne's AI Humanizer eliminates the gamble. Your work remains yours—just protected from false accusation. We're not changing your message; we're ensuring it gets through without triggering broken detectors.

The academic system failed you. The detection tools betrayed you. The institutions abandoned you. But you're not powerless. You have options. You have tools. You have Ryne.

Stop asking why your essay was detected as AI. Start ensuring it never happens again.

The system is broken. We're your solution.

Welcome to Ryne. Welcome to control.

Ready to protect your academic work from false AI detection? Try Ryne's AI Humanizer now. Because your original work deserves to be recognized as exactly that—yours.
