The AI Homework Dilemma

How students got the cheat codes while schools are still downloading the game.

Everyone knows the homework game is broken. Students pretend to do it. Teachers pretend to grade it. Parents pretend their kids aren't using ChatGPT. We're all playing roles in an educational theater, and AI just opened the curtains.

Students have started using artificial intelligence for their homework assignments. In fact, 89% of them do. That's not a typo. Almost 90 percent of students have learned what every clever operator knows: work smarter, not harder. While educators debate the nuances of “academic integrity,” students are many steps ahead, using technology that makes traditional homework about as relevant as a typewriter is to writing code.

The old guard calls it cheating. Students call it Tuesday. And Ryne Humanizer calls it exactly what it is: adapting to reality while everyone else clings to a broken system.

The Numbers Don't Lie (But Your Homework Policy Does).

Let's cut through the noise with hard data. According to HEPI's 2025 student survey, 88% of university students now use generative AI for assessments, up from 53% a year earlier. A report from Carnegie Mellon University notes a “huge uptick” in academic responsibility violations. But here's the thing: most students don't realize they're doing anything wrong. That's because the rules are inconsistent, out of date, and utterly ridiculous.

At the University of Pennsylvania, academic integrity violations have become so complex that the university created an entirely new category: "Misuse of Artificial Intelligence." Meanwhile, research from Frontiers in Education shows that 13% of American teenagers used ChatGPT for homework in 2023, and that the figure doubled to 26% by 2024. By 2025? The floodgates are open.

In AI-enhanced learning environments, students score 54% higher on tests than in traditional classrooms. Read that again. Using AI doesn't make students less intelligent; it has been shown to enhance learning. The system is punishing creativity, not cheating.

The Great Academic Theater Performance.

Casey Cuny, a 23-year veteran English teacher and California's 2024 Teacher of the Year, puts it bluntly: "The cheating is through the roof. It's the worst it's been in my entire career." But he also admits what any honest educator would tell you: "Anything you send home, you have to assume is being AI'ed."

The entire homework system runs on a mutual delusion we all share.

Teachers who assign take-home essays know students will use AI. Students hand in AI-generated homework knowing teachers know. Administrators' AI policies differ from classroom to classroom, which only creates confusion. One teacher bans Grammarly while another requires it. One professor uses ChatGPT while a colleague treats it as academic anthrax.

Stanford and MIT research confirms what's obvious to anyone paying attention: institutions that successfully integrate AI see measurable improvements in student performance, while those clinging to prohibition create environments of confusion and dishonesty.

The joke? Universities are spending millions to harness AI while threatening students who use the same technology for free. It's like teaching someone to drive while telling them to keep their eyes shut.

Why Traditional Homework Is Already Dead (It Just Doesn't Know It Yet).

Here's what educators have a hard time acknowledging: AI didn't cause cheating; it merely revealed that homework is broken. The old model assumes students will struggle at home with content they barely understood in class and that, through repetition, they will somehow attain enlightenment.

That's not education. That's hazing.

Smart students have always found workarounds. They formed study groups, hired tutors, or just copied off the kid who got calculus. AI just democratized the process. Today, students have their very own 24/7 tutor that never judges them, never gets frustrated, and explains things in a way that makes sense.

Research shows that students in AI-powered personalized learning achieve 30% better outcomes than those in traditional learning. They complete courses at a 70% higher rate. Attendance rises by 12%. Dropout rates fall by 15%.

These aren't cheating statistics. They're success metrics that any sane education system would celebrate.

The Real Dilemma: Adaptation or Extinction.

UC Berkeley just sent new AI guidance to all faculty with three options: require AI, ban AI, or allow some AI. That banning AI is still on the table in 2025 shows just how slow academia is to change.

Meanwhile, students like Lily Brown, a psychology major at an East Coast school, navigate this maze daily: “Sometimes I feel bad using ChatGPT to summarize reading, because I wonder, is this cheating? Is helping me form outlines cheating?”

This confusion isn't accidental. The education system has lost sight of why we educate at all. Are we teaching students to memorize what Google can tell them instantly? Are we really testing their ability to compose essays that ChatGPT can write in seconds? Or are we preparing them for a world where using AI is as crucial as reading and writing?

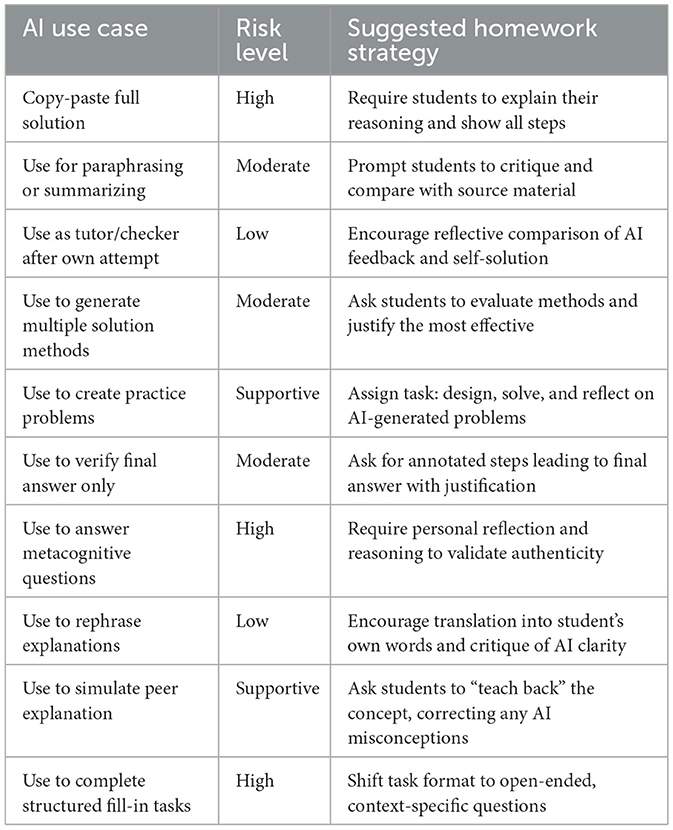

More and more educators are choosing the latter because it works. They are developing “AI-resistant” assessments built on personal reflection, creativity, and more. The goal is for students to use AI as a tool, not a crutch.

Enter Ryne: The Solution That Actually Works.

While universities fumble with policies and teachers play whack-a-mole with AI detection software, Ryne built something revolutionary: an AI platform that enhances learning instead of replacing it.

Most students rely on ChatGPT to skip learning. Ryne users leverage AI to accelerate it. Our portal doesn't just give you an answer. It teaches you concepts, gives you feedback, and offers solutions tailored to your unique learning journey. It's the difference between having a tutor and cheating on a test.

And when students need to submit original work? Ryne AI Humanizer ensures their AI-assisted learning translates into authentic, personal expression. We're not helping students cheat; we're helping them succeed at a game whose rules keep changing.

The establishment calls this controversial. Students call it essential. We call it obvious.

The Corporate World Gets It (While Academia Debates).

What drives me insane is that while colleges argue about whether AI is “cheating,” businesses have already moved on. The global corporate e-learning market is projected to reach $44.6 billion by 2028, driven largely by widespread AI adoption. Companies using AI-powered training report 57% greater learning efficiency.

McKinsey says that workers with AI skills could add $7.9 trillion to the global economy. According to Microsoft, 92% of business leaders plan to increase their AI investments over the next three years. These aren't companies known for throwing money at fads. They're investing because AI-enabled learning delivers measurable ROI.

Meanwhile, 77% of workers worldwide say they want to learn AI skills. They realize that AI know-how could be as important today as computer literacy was in the 1990s. They're not worried about “academic integrity”; they're worried about relevance.

The disconnect is staggering. Colleges claim to prepare students for a workforce that demands AI fluency while punishing them for building it.

The Path Forward (For Those Brave Enough to Take It).

The problem isn't hard to crack, but solving it requires the one thing academia can't seem to do: admit that the old way is broken.

First, kill the traditional homework model. If AI can complete a task entirely, don't assign it. Period. Instead of rote repetition, give assignments that make students think and reflect.

Second, teach AI literacy like any other core competency, because that's exactly what it is. Students need to understand not just how to use AI, but when and why. They should learn about AI's limitations, biases, and uses. This isn't optional; it's essential.

Third, embrace transparency. Everyone, stop pretending you're not using AI. Create clear, consistent policies that acknowledge reality. Better still, build curricula that incorporate AI as a learning tool.

Some educators get it. Casey Cuny and other teachers are adapting with in-class writing, verbal assessments, and AI use in the classroom. Their students are learning to use AI as a study tool instead of cheating with it. These teachers are preparing students for the real world, not some make-believe one.

The Bottom Line: Evolution or Irrelevance.

Nobody wants to say it out loud, but the homework problem isn't about AI. It's about an education system that has failed to evolve. AI just made that failure impossible to ignore.

Students aren't cheating more; they're just adapting faster than institutions. These tools help them learn more effectively, research better, and work more efficiently. They're preparing for a world where AI-fluent workers are the norm.

The schools that survive will be the ones that recognize this and adapt. They'll redesign their curricula around AI instead of against it. They'll measure success by learning outcomes. They'll prepare students for the real world, not the one administrators wish were real.

Ryne Humanizer exists because we saw this coming. We built tools that enhance rather than replace learning. We developed systems that uphold academic integrity while leveraging the power of AI. We handed students the cheat codes to a flawed system, not to help them cheat, but so they can win.

The AI homework dilemma isn't a dilemma at all. It's an opportunity: a ticket to success for those wise enough to seize it, and a path to irrelevance for those who ignore it.

Your move, academia.

Want to stop playing by outdated rules? Ryne is built for students who refuse to let a broken system hold them back. Our AI humanizer ensures your work maintains authenticity while leveraging AI's power. In the real world, using AI effectively isn't cheating; it's winning.

For a complete tutorial on maximizing your academic potential with AI (while maintaining integrity), watch it on YouTube.

Is AI Making Professors Obsolete?

Your professor isn't teaching you anymore. ChatGPT is. While universities pretend everything's fine, 92% of students are already using AI tools for their coursework. Not 50%. Not 75%. Ninety-two percent. And here's the kicker: students using ChatGPT with fake professor personas rate the AI higher in teaching clarity and competence than actual human professors.

Let's cut through the academic theater and face what's actually happening in classrooms worldwide. While professors clutch their tenure and administrators count tuition dollars, students have already voted with their keyboards.

What Do the 2025 Numbers Say About AI in Education?

While educators debate whether AI belongs in the classroom, students have already made their choice. They're not asking permission. They're not waiting for approval. According to HEPI's 2025 Student Survey, 88% of university students are using AI for assessments right now, up from 53% just one year ago. That's not adoption. That's a takeover. Here's the funny part: while educators fret about “academic integrity,” students are using AI to cope with anxiety and family conflict and, yes, to write better papers faster. The establishment is playing checkers, fixated on removing pieces instead of working toward winning the game.